[Note: this article is mostly a conversation I had with an AI. I liked the output, so I am including it here. It may not be the most genuine of blog posts, but it is something I will refer back to, so I am sharing it with you as well.]

|

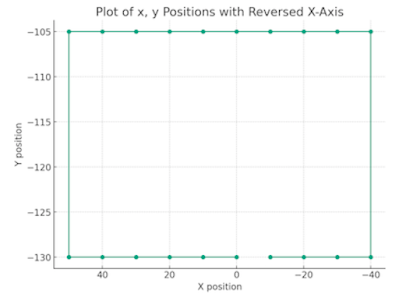

| A Simple SLAM Simulation |

SLAM, which stands for Simultaneous Localization and Mapping, is a critical concept in robotics, especially in autonomous systems where the robot must navigate through an unknown environment. Here’s a detailed breakdown of the basic components and methods involved in SLAM:

What is SLAM?

SLAM is the process by which a robot can:

1. Map an Environment: Create a representation (usually a 2D or 3D map) of an unfamiliar area.

2. Localize Itself: Determine its own position within that map, in real time.

SLAM is a solution to the "chicken and egg" problem of robotics: To map the environment, a robot needs to know where it is, but to know where it is, it needs a map. SLAM solves both tasks simultaneously.

Key Components of SLAM

1. Sensors:

- Lidar: Light Detection and Ranging (uses laser beams to measure distances).

- Cameras: Visual or stereo cameras for Visual SLAM (VSLAM).

- Ultrasonic Sensors: Simple but effective for obstacle detection.

- IMUs (Inertial Measurement Units): Track orientation and movement changes.

- Encoders: Wheel encoders measure wheel rotation, giving a simple but effective odometry estimate of how far the robot has moved.

2. State Estimation:

- Pose: Position (x, y, z) and orientation (yaw, pitch, roll) are estimated continuously.

- Common methods for estimating this are Kalman Filters or Particle Filters. For complex non-linear systems, the Extended Kalman Filter (EKF) or Unscented Kalman Filter (UKF) is often used.

3. Mapping:

- The robot gathers spatial information about obstacles and free space.

- Maps are usually grid-based (like occupancy grids) or feature-based.

- Occupancy Grid Mapping divides space into a grid of cells, marking each cell as occupied, free, or unknown.

4. Loop Closure:

- The robot must recognize a location it has visited before, called loop closure.

- Without recognizing these loops, errors can accumulate, leading to an inaccurate map.

- Visual features, like walls or landmarks, are often used for loop closure.
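To make the state estimation component above a little more concrete, here is a minimal sketch of a one-dimensional Kalman filter. It assumes a robot moving along a line with Gaussian motion and measurement noise; the function names, noise variances, and sample readings are all made up for illustration and do not come from any particular library.

```python
def kf_predict(mean, var, motion, motion_var):
    """Motion step: shift the estimate by the commanded motion.
    Uncertainty grows because the motion itself is noisy."""
    return mean + motion, var + motion_var


def kf_update(mean, var, z, meas_var):
    """Measurement step: blend the prediction with reading z.
    The Kalman gain weights whichever source is less uncertain."""
    gain = var / (var + meas_var)
    new_mean = mean + gain * (z - mean)
    new_var = (1.0 - gain) * var
    return new_mean, new_var


if __name__ == "__main__":
    mean, var = 0.0, 1.0  # initial belief: near 0 m, fairly uncertain
    # Hypothetical data: commanded 1 m steps paired with noisy position readings.
    for motion, z in [(1.0, 1.1), (1.0, 1.9), (1.0, 3.05)]:
        mean, var = kf_predict(mean, var, motion, motion_var=0.1)
        mean, var = kf_update(mean, var, z, meas_var=0.2)
        print(f"mean={mean:.3f} var={var:.3f}")
```

The predict step always increases the variance and the update step always reduces it, which is the basic rhythm of filter-based SLAM. A real robot pose would need a multi-dimensional state and, for non-linear motion, an EKF or UKF.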

Mathematical Foundations

1. Probabilistic Approach:

- SLAM typically involves Bayesian Estimation. A robot's state is represented as a probability distribution that evolves as new data arrives from the sensors.

- Bayes Filters and Markov Localization are used to manage the uncertainty of sensor data and robot motion.

2. State-Space Representation:

- The SLAM problem can be represented in terms of states and measurements, where states represent robot and feature positions and measurements represent distances and angles to landmarks.

- The sensor model and motion model help update the robot’s understanding of the environment.
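The interplay of motion model and sensor model can be sketched with a discrete Bayes filter (sometimes called a histogram filter). The example below assumes a toy circular corridor of five cells labelled "door" or "wall"; the corridor layout and the probabilities `p_move` and `p_hit` are invented for illustration.

```python
def normalize(belief):
    """Rescale so the probabilities sum to 1."""
    total = sum(belief)
    return [p / total for p in belief]


def predict(belief, p_move=0.8):
    """Motion model: the robot tries to move one cell to the right on a
    circular corridor and succeeds with probability p_move, else stays."""
    n = len(belief)
    return [p_move * belief[(i - 1) % n] + (1.0 - p_move) * belief[i]
            for i in range(n)]


def update(belief, world, z, p_hit=0.9):
    """Sensor model: reading z matches the true cell label with
    probability p_hit. Bayes' rule: posterior ~ likelihood * prior."""
    likelihood = [p_hit if cell == z else 1.0 - p_hit for cell in world]
    return normalize([l * p for l, p in zip(likelihood, belief)])


if __name__ == "__main__":
    world = ["door", "wall", "wall", "door", "wall"]  # hypothetical map
    belief = [1.0 / len(world)] * len(world)          # start fully uncertain
    belief = update(belief, world, "door")   # robot sees a door
    belief = predict(belief)                 # robot moves one cell right
    belief = update(belief, world, "wall")   # robot sees a wall
    print([round(p, 3) for p in belief])
```

After seeing a door, moving, and then seeing a wall, the belief concentrates on the cells immediately to the right of a door. Note that this sketch localizes against a known map; full SLAM also has to estimate the map itself.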

Approaches to SLAM

1. Kalman Filter-Based SLAM:

- The Extended Kalman Filter (EKF) SLAM is one of the earliest methods.

- It’s efficient for small maps but scales poorly: the covariance matrix grows quadratically with the number of landmarks, so each update becomes expensive in large environments.

2. Particle Filter-Based SLAM:

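- Closely related to Monte Carlo Localization (MCL), which uses the same particle idea to localize against a known map. Particle-filter SLAM methods such as FastSLAM extend it so that each particle also carries its own map estimate.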

- Uses particles to represent possible positions of the robot. It’s highly robust for non-linear problems and can handle multi-modal distributions better than EKF.

3. Graph-Based SLAM:

- The problem is represented as a graph, where nodes represent robot poses and landmarks, and edges represent constraints between them (from sensors).

- After creating the graph, optimization is used to adjust all nodes, effectively minimizing the error in both localization and mapping.

4. Visual SLAM (VSLAM):

- Uses cameras to detect visual landmarks. ORB-SLAM, named after the ORB (Oriented FAST and Rotated BRIEF) features it tracks, is a popular implementation.

- Visual SLAM can use either monocular or stereo cameras. Stereo cameras provide depth information directly, while monocular cameras infer depth using techniques like feature triangulation over time.
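The graph-based idea above can be illustrated with a deliberately tiny example: poses on a one-dimensional line, odometry edges between consecutive poses, and one loop-closure edge saying the robot ended up back where it started. This sketch uses a simple Gauss-Seidel-style relaxation in pure Python rather than the sparse non-linear solvers that real systems rely on; the function name, measurements, and information weights are assumptions chosen for illustration.

```python
def relax_pose_graph(initial, edges, iterations=200):
    """Minimize the weighted squared error of all edge constraints.

    edges: list of (i, j, measured x_j - x_i, information weight).
    Pose 0 is held fixed as the anchor. Each sweep moves every other pose
    to the weighted average of the positions its edges predict for it.
    """
    poses = list(initial)
    for _ in range(iterations):
        for k in range(1, len(poses)):
            num = 0.0
            den = 0.0
            for i, j, meas, info in edges:
                if j == k:    # this edge predicts x_k = x_i + meas
                    num += info * (poses[i] + meas)
                    den += info
                elif i == k:  # this edge predicts x_k = x_j - meas
                    num += info * (poses[j] - meas)
                    den += info
            if den > 0.0:
                poses[k] = num / den
    return poses


# Hypothetical run: the robot drives out 2 m and back, but its odometry
# over-reads forward motion and under-reads the return trip.
odometry = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0),
            (2, 3, -0.9, 1.0), (3, 4, -0.9, 1.0)]
loop_closure = [(4, 0, 0.0, 100.0)]  # pose 4 recognized as the start

dead_reckoning = [0.0, 1.1, 2.2, 1.3, 0.4]  # odometry alone ends 0.4 m off
optimized = relax_pose_graph(dead_reckoning, odometry + loop_closure)

if __name__ == "__main__":
    print([round(x, 3) for x in optimized])
```

The single loop-closure edge pulls the final pose back toward the start and spreads the accumulated 0.4 m of drift across all four odometry edges, which is the essence of graph optimization.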

Mapping Techniques

1. Occupancy Grid Mapping:

- The environment is divided into a discrete grid where each cell holds a probability of being occupied.

- Each cell is updated from sensor data using Bayes’ rule, commonly in log-odds form so that an update becomes a simple addition.

2. Feature-Based Mapping:

- Instead of dividing space into grids, the system identifies key features or landmarks (corners, edges, unique patterns) and tracks them.

- It’s computationally efficient in sparse environments.

3. Octomap (3D Occupancy Grid Mapping):

- Uses octrees to create maps that efficiently represent 3D environments.
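As a rough sketch of how an occupancy grid update works, the example below applies the common log-odds form of Bayes' rule to a single one-dimensional ray of cells. The inverse sensor model values (0.7 for a hit, 0.3 for a pass-through) and the helper names are assumptions picked for illustration.

```python
import math


def logit(p):
    """Probability -> log-odds."""
    return math.log(p / (1.0 - p))


def prob(log_odds):
    """Log-odds -> probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


L_OCC = logit(0.7)   # assumed evidence added when a beam ends in a cell
L_FREE = logit(0.3)  # assumed evidence added when a beam passes through


def update_ray(log_odds, hit_index):
    """Cells before hit_index were traversed by the beam (more likely free);
    the cell at hit_index reflected it (more likely occupied).
    Cells beyond the hit are not observed and stay unchanged."""
    for i in range(min(hit_index, len(log_odds))):
        log_odds[i] += L_FREE
    if hit_index < len(log_odds):
        log_odds[hit_index] += L_OCC
    return log_odds


if __name__ == "__main__":
    grid = [0.0] * 6  # log-odds 0 means p = 0.5, i.e. unknown
    for _ in range(3):          # three identical scans hitting cell 4
        update_ray(grid, 4)
    print([round(prob(l), 3) for l in grid])
```

Repeated consistent observations push free cells toward 0 and the obstacle cell toward 1, while the unobserved cell behind the obstacle stays at 0.5. A 2D grid works the same way, with ray tracing deciding which cells each beam crosses.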

Challenges in SLAM

1. Sensor Noise: Every sensor has inherent noise that needs to be filtered out.

2. Motion Model Uncertainty: The real-world movements of robots have slippage and drift, leading to deviations between intended and actual motion.

3. Loop Closure Errors: A false match to a previously visited location, if not detected and rejected, can pull the whole map out of alignment.

4. Scalability: Larger maps become computationally intensive, and optimizing these graphs for large environments requires sophisticated approaches.
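The drift challenge above is easy to see in a small dead-reckoning sketch. It assumes a robot driving in a straight line whose heading estimate picks up a tiny constant bias on every step; the bias value, step length, and function names are hypothetical, and real drift is noisier than this deterministic version.

```python
import math


def dead_reckon(steps, step_len=0.1, heading_bias=0.0):
    """Integrate motion step by step. heading_bias (radians per step)
    models a small uncorrected error in the heading estimate."""
    x = y = theta = 0.0
    path = [(x, y)]
    for _ in range(steps):
        theta += heading_bias
        x += step_len * math.cos(theta)
        y += step_len * math.sin(theta)
        path.append((x, y))
    return path


def drift(true_path, est_path, step):
    """Distance between true and estimated position at a given step."""
    (tx, ty), (ex, ey) = true_path[step], est_path[step]
    return math.hypot(tx - ex, ty - ey)


true_path = dead_reckon(100)                      # perfect straight line
est_path = dead_reckon(100, heading_bias=0.002)   # slightly biased estimate

if __name__ == "__main__":
    for step in (10, 50, 100):
        print(step, round(drift(true_path, est_path, step), 3))
```

Even a bias of 0.002 rad per step produces an error that keeps growing with distance travelled, which is why SLAM leans on sensor corrections and loop closure rather than odometry alone.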

Popular SLAM Frameworks and Libraries

1. GMapping: A well-known 2D SLAM algorithm using Particle Filters, often used with ROS (Robot Operating System).

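2. Hector SLAM: Uses 2D Lidar scan matching and does not require wheel odometry (an IMU can optionally be added for platforms that pitch and roll). Known for high-speed, low-latency mapping in indoor environments.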

3. Cartographer by Google: Supports both 2D and 3D SLAM, popular for its real-time mapping capabilities.

4. ORB-SLAM: A Visual SLAM library focusing on feature-based landmarks, very popular in robotics and augmented reality.

Use Cases in Robotics

1. Autonomous Vacuum Cleaners: These use SLAM to map a house layout and optimize cleaning routes while avoiding obstacles.

2. Delivery Robots: Like those used in Amazon warehouses, where they navigate autonomously through aisles and handle dynamic obstacles.

3. Drones: Drones often employ Visual SLAM to map unknown environments for navigation and collision avoidance.

4. Self-Driving Cars: While more sophisticated, they often employ a form of SLAM to localize the vehicle and build environmental maps for path planning.

Ah... These Use Cases are just the basic ones the AI can share, the low-hanging fruit. The Use Cases are much, much more expansive. All robots and machines interacting in physical space will need some form of SLAM. Once the machine is localized and positions are known, the applications of its sensors, manipulators, and motion can become effective. This is just the beginning; there are many applications that are yet to be realized or identified!

Advanced SLAM Topics to Explore

- Multi-Robot SLAM: Where a team of robots collaborates to create a common map.

- SLAM with Deep Learning: Incorporating neural networks to aid feature extraction and recognition, which can improve SLAM reliability, especially in challenging environments.

- SLAM in Dynamic Environments: Handling environments where objects are constantly in motion, requiring more sophisticated filtering to identify static versus dynamic features.

Conclusion

SLAM forms the backbone of autonomous navigation, providing a robot with the ability to understand and navigate in its environment. Whether using traditional Lidar-based approaches, visual methods, or newer graph-based optimization techniques, each approach has its own strengths and trade-offs depending on the application scenario.

Moving forward, I will be expanding my Simple SLAM! Simulator to explore the necessary components of SLAM. My interest is (at least!) twofold:

1) The digitalization of physical space: fusing sensor data to observe and record what in our world is difficult for humans to perceive across the spectral, spatial, and temporal dimensions.

2) Developing the concepts around Machine Perception of the physical world.